Finally, I want to introduce Convolutional Neural Networks (CNNs). I saved them for last because CNNs involve a lot of mathematics, which makes them quite cumbersome to implement from scratch using only NumPy. However, if you just want to understand the basic intuition behind CNNs, the TensorFlow framework makes it easy and straightforward.

I plan to explain how to integrate CNNs into our previously built neural network framework in a non-starred section, and how to build a CNN from scratch using only NumPy in a starred section. The starred sections are meant to be more challenging, so readers can enjoy the non-starred sections with a relaxed and happy mindset.

This chapter will focus on some fundamental concepts of CNNs. First, I want to clarify that, structurally, a simple CNN is not much different from a standard neural network (though complex CNNs can differ significantly). In other words, a basic CNN consists of only two parts:

- A hierarchical layer structure

- A network structure that integrates these layers

So, when implementing the algorithm, we’re essentially working on the same two parts as in our previous neural network implementation.

Once the structure is clear, we need to look at the core ideas behind CNNs. In general, they can be summarized in two main principles:

- Local connection (Sparse connectivity)

- Weight sharing

These ideas are quite intuitive. For example, when we look at a scene, we don’t take in the whole image at once. Instead, we focus on one piece at a time, receiving information in “chunks” (the region each piece covers is known as the “local receptive field”). Throughout this process our way of thinking stays the same, and after observing the scene we might conclude that “the scenery is beautiful.” We then adjust our thinking based on that impression. In this analogy, each “piece” of the scenery corresponds to a local connection, and our way of thinking corresponds to the weights. Because we apply the same way of thinking to every region of the scene, this mirrors the biological idea behind weight sharing. (Note: This analogy is my own, and I can't guarantee its academic rigor, so I encourage readers to approach it critically. If experts find any inaccuracies, I hope they’ll forgive me with a smile.)
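To make local connection and weight sharing concrete, here is a minimal NumPy sketch of a 2-D convolution with stride 1 and no padding. It assumes a single-channel input and a single kernel, and it computes the cross-correlation form (no kernel flip) that deep learning libraries such as TensorFlow actually use; the function name `conv2d_valid` is hypothetical and is not part of our framework.

```python
import numpy as np


def conv2d_valid(x, w):
    """Naive 2-D cross-correlation with stride 1 and no padding.

    x: single-channel input of shape (H, W)
    w: kernel of shape (kh, kw), shared by every output position
    """
    H, W = x.shape
    kh, kw = w.shape
    out = np.zeros((H - kh + 1, W - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # Local connection: output (i, j) only sees a kh x kw window
            patch = x[i:i + kh, j:j + kw]
            # Weight sharing: the same kernel w is applied at every position
            out[i, j] = np.sum(patch * w)
    return out


x = np.arange(16, dtype=float).reshape(4, 4)
w = np.array([[1.0, 0.0],
              [0.0, -1.0]])
print(conv2d_valid(x, w))
```

With this input every output entry equals `x[i, j] - x[i + 1, j + 1]`, which is -5 everywhere, so the 3 × 3 result is filled with -5. The same kernel responds identically to the same local pattern wherever it occurs.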

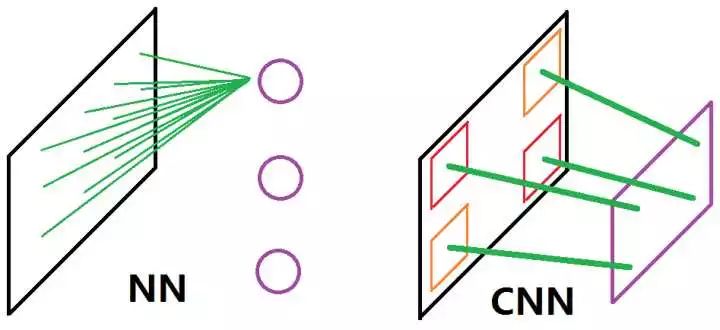

Textual explanations can still feel abstract. To help clarify, I’ve drawn a diagram (based on a reference image, but I've modified it to avoid confusion):

This image highlights the difference between a traditional neural network (NN) and a CNN. On the left, the NN is fully connected: every neuron sees the entire input (a global receptive field), and every connection has its own independent weight, so the network cannot take advantage of the spatial layout of raw data such as images. On the right, the CNN uses local receptive fields combined with shared weights: each neuron "sees" only a small patch of the input, and the same weights are reused across different regions. This makes the model far more parameter-efficient and better at capturing spatial hierarchies.
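One practical consequence of the diagram is the number of parameters. The sketch below compares a fully connected layer with a convolutional layer that produce the same number of output units. The helper names and the example sizes (a 28 × 28 grayscale input, 32 filters of size 3 × 3, stride 1, no padding) are illustrative assumptions, not values used elsewhere in this series.

```python
def fc_params(in_size, out_units):
    # Fully connected: every output unit has its own weight for every input
    # value (global receptive field, no sharing), plus one bias per unit.
    return in_size * out_units + out_units


def conv_params(kh, kw, in_channels, out_channels):
    # Convolution: each filter spans only a kh x kw x in_channels window
    # (local connection) and is reused at every position (weight sharing),
    # plus one bias per filter.
    return kh * kw * in_channels * out_channels + out_channels


# Hypothetical example: 28x28x1 input, 32 filters of size 3x3, stride 1,
# no padding -> a 26x26x32 output.
in_size = 28 * 28 * 1
out_units = 26 * 26 * 32

print(fc_params(in_size, out_units))  # 16981120
print(conv_params(3, 3, 1, 32))       # 320
```

Both layers produce 21,632 output values, yet the convolutional layer needs only 320 parameters, while the fully connected one needs roughly 17 million.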

Next, I'll explain how to implement this idea, specifically the convolution operation in CNNs. The exact mathematical details will be covered in the math series; for now, here’s a glimpse of the code (thanks again to TensorFlow!):

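```python
import tensorflow as tf

# Methods of the convolutional layer class. `layer` is assumed to refer to the
# ordinary layer class (from our earlier framework) whose activation is reused.

def _conv(self, x, w):
    # x: input batch in NHWC layout; w: filters of shape
    # (filter_height, filter_width, in_channels, out_channels).
    # tf.nn.conv2d requires strides[0] == strides[3] == 1 (no striding over
    # the batch or channel dimensions), so only the spatial strides vary.
    return tf.nn.conv2d(x, w, strides=[1, self._stride, self._stride, 1],
                        padding=self._pad_flag)  # "SAME" or "VALID"

def _activate(self, x, w, bias, predict):
    # Convolve, then add one bias per output channel (broadcast over N, H, W)
    res = self._conv(x, w) + bias
    # Apply the activation function defined by the parent layer class
    return layer._activate(self, res, predict)
```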

This code involves concepts that will be explained in detail later, but if you understand the basics, it should be fairly readable.
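The two hyperparameters used in `_conv`, the stride and the padding flag, determine the spatial size of the output. The helper below is a sketch (the name `conv_output_size` is hypothetical) of the output-size rules TensorFlow documents for its `"SAME"` and `"VALID"` padding modes, applied along one spatial dimension.

```python
import math


def conv_output_size(in_size, kernel_size, stride, padding):
    """Output length along one spatial dimension for "SAME"/"VALID" padding."""
    if padding == "SAME":
        # Input is zero-padded so the output length depends only on the stride
        return math.ceil(in_size / stride)
    if padding == "VALID":
        # No padding: the kernel must fit entirely inside the input
        return math.ceil((in_size - kernel_size + 1) / stride)
    raise ValueError(f"unknown padding flag: {padding!r}")


# Example: a 28-pixel-wide input with a 3-wide kernel
for pad in ("SAME", "VALID"):
    for stride in (1, 2):
        print(pad, stride, conv_output_size(28, 3, stride, pad))
```

With `"SAME"` the width stays at 28 for stride 1 and halves to 14 for stride 2, while `"VALID"` shrinks it to 26 and 13 respectively.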
